This post was authored by Brian Dipert, editor-in-chief at the Embedded Vision Alliance.

In order for manufacturing robots and other industrial automation systems to meaningfully interact with the objects they are assembling, as well as to deftly and safely move about in their environments, they must be able to see and understand their surroundings. Cost-effective and capable vision processors, fed by depth-discerning image sensors and running robust software algorithms, are transforming longstanding autonomous and adaptive industrial automation aspirations into reality.

Automated systems in manufacturing line environments are capable of working more tirelessly, faster, and more exactly than humans. However, their success has traditionally been predicated on incoming parts arriving in fixed orientations and locations, thereby increasing manufacturing process complexity. Any deviation in part position or orientation causes assembly failures. Humans use their eyes (along with other senses) and brains to understand and navigate through the world around them. Robots and other industrial automation systems should be able to do the same thing. They can leverage camera assemblies, vision processors, and various software algorithms in order to skillfully adapt to evolving manufacturing line circumstances, as well as to extend vision processing’s benefits to other areas of the supply chain, such as piece parts and finished goods inventory tracking.

Historically, such vision-augmented technology has typically been found only in a few complex, expensive systems. However, cost, performance, and power consumption advances in digital integrated circuits are now paving the way for the proliferation of vision into diverse mainstream automated manufacturing platforms. Implementation challenges remain, but they are more easily, rapidly, and cost-effectively solved than has ever before been possible. And an industry alliance composed of leading product and service suppliers is a key factor in this burgeoning technology success story.

Inventory tracking

Embedded vision innovations can help improve product tracking through production lines and enhance storage efficiency. While bar codes and radio-frequency identification tags can also help track and route materials, they cannot be used to detect damaged or flawed goods. Intelligent raw material and product tracking and handling in the era of embedded vision will be the foundation for the next generation of inventory management systems, as image sensor technologies continue to mature and as other vision processing components become increasingly integrated. High-resolution cameras can already provide detailed images of work material and inventory tags, but complex, real-time software is needed to analyze the images, to identify objects within them, to identify ID tags associated with these objects, and to perform quality checks.

A pharmaceutical packaging line uses vision-guided robots to quickly pick syringes from conveyer belts and place them into packages.

The phrase “real-time” can potentially mean rapidly evaluating dozens of items per second. To meet the application’s real-time requirements, various tasks must often run in parallel. On-the-fly quality checks can be used to spot damaged material and to automatically update an inventory database with information about each object and details of any quality issues. Vision systems for inventory tracking and management can deliver robust capabilities without exceeding acceptable infrastructure costs, by integrating multiple complex real-time video analytics extracted from a single video stream.

Automated assembly

Embedded vision is a key enabling technology for the factory production floor in areas such as raw materials handling and assembly. Cameras find use in acquiring images of, for example, parts or destinations. Subsequent vision processing sends data to a robot, enabling it to perform functions such as picking up and placing a component. As previously mentioned, industrial robots inherently deliver automation benefits such as scalability and repeatability. Adding vision processing to the mix allows these machines to be far more flexible. The same robot can be used with a variety of parts, because it can see which particular part it is dealing with and adapt accordingly.

Three-dimensional imaging is used to measure the shape of a cookie and inspect it for defects.

Factories can also use vision in applications that require high-precision assembly; cameras can “image” components after they are picked up, with slight corrections in the robot position made to compensate for mechanical imperfections and varying grasping locations. Picking parts from a bin also becomes easier. A camera can be used to locate a particular part with an orientation that can be handled by the robotic arm, within a pile of parts.

Depth-discerning 3-D vision is a growing trend that can help robots perceive even more about their environments. Cost-effective 3-D vision is now appearing in a variety of applications, from vision-guided robotic bin picking to high-precision manufacturing metrology. Latest-generation vision processors can now adeptly handle the immense data sets and sophisticated algorithms required to extract depth information and rapidly make decisions. Three-dimensional imaging is enabling vision processing tasks that were previously impossible with traditional 2-D cameras. Depth information can be used, for example, to guide robots in picking up parts that are disorganized in a container.

Automated inspection

An added benefit of using vision for robotic guidance is that the same images can also be used for in-line inspection of the parts being handled. In this way, not only are robots more flexible, they can also produce higher-quality results. This outcome can also be accomplished at lower cost, because the vision system can detect, predict, and prevent jams and other undesirable outcomes. If a high degree of accuracy is needed within the robot’s motion, a technique called visual servo control can be used. The camera is either fixed to the robot or mounted nearby and gives continuous visual feedback (versus only a single image at the beginning of the task) to enable the robot controller to correct for small errors in movement.
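The feedback loop behind visual servo control can be illustrated with a minimal sketch. It assumes a simple proportional controller and a hypothetical robot that moves slightly less than commanded; the names `servo_to_target`, `gain`, and `actuator_efficiency` are illustrative and are not taken from any particular robot controller.

```python
import math

def servo_to_target(start, target, gain=0.5, actuator_efficiency=0.9,
                    tolerance=0.01, max_steps=100):
    """Drive a 2-D position toward a target using repeated visual feedback.

    Each loop iteration stands in for one camera frame: the remaining
    error is measured and a proportional correction is commanded.
    actuator_efficiency < 1 models a robot that undershoots each move
    (a hypothetical mechanical imperfection).
    """
    x, y = start
    for step in range(max_steps):
        # Error as it would be measured from the current camera image
        err_x, err_y = target[0] - x, target[1] - y
        if math.hypot(err_x, err_y) <= tolerance:
            return (x, y), step
        # Proportional command, executed imperfectly by the robot
        x += gain * err_x * actuator_efficiency
        y += gain * err_y * actuator_efficiency
    return (x, y), max_steps

final_position, frames_used = servo_to_target((0.0, 0.0), (10.0, 5.0))
```

Because the error is re-measured on every frame, the undershoot is corrected on later iterations. A single open-loop move planned from one initial image would leave it uncorrected.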

Beyond robotics, vision has many uses and delivers many benefits in automated inspection. It performs tasks such as checking for the presence of components, reading text and bar codes, measuring dimensions and alignment, and locating defects and patterns. Historically, quality assurance was often performed by randomly selecting samples from the production line for manual inspection, and then using statistical analysis to extrapolate the results to the larger manufacturing run. This approach leaves unacceptable room for defective parts to cause jams in machines further down the manufacturing line or for defective products to be shipped. Automated inspection, on the other hand, can provide 100 percent quality assurance. And with recent advancements in vision processing performance, automated visual inspection is frequently no longer the limiting factor in manufacturing throughput.
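A short calculation shows why sampling leaves room for defects to slip through. The sketch below assumes that defects occur independently at a fixed rate, which is a simplification; the function name and the example numbers are illustrative only.

```python
def probability_sample_misses(defect_rate, sample_size):
    """Chance that a random sample contains no defective part at all.

    Assumes each part is defective independently with probability
    defect_rate (a simplifying assumption).
    """
    if not 0.0 <= defect_rate <= 1.0:
        raise ValueError("defect_rate must be between 0 and 1")
    if sample_size < 0:
        raise ValueError("sample_size must not be negative")
    return (1.0 - defect_rate) ** sample_size

# Hypothetical run: 1 percent of parts are defective, 50 parts are sampled
miss_chance = probability_sample_misses(0.01, 50)
```

With these example numbers the sample contains no defective part roughly 60 percent of the time, even though defective parts are present in the run.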

The vision system is just one piece of a multistep puzzle and must be synchronized with other equipment and input/output protocols to work well within an application. A common inspection scenario involves separating faulty parts from correct ones as they transition through the production line. These parts move along a conveyer belt with a known distance between the camera and the ejector location that removes faulty parts. As the parts migrate, their individual locations must be tracked and correlated with the image analysis results, in order to ensure that the ejector correctly sorts out failures.

Production and assembly applications, such as the system in this winery, need to synchronize a sorting system with the visual inspection process.

Multiple methods exist for synchronizing the sorting process with the vision system, such as time stamps with known delays and proximity sensors that also keep track of the number of parts that pass by. However, the most common method relies on encoders. When a part passes by the inspection point, a proximity sensor detects its presence and triggers the camera. After a known encoder count, the ejector will sort the part based on the results of the image analysis.
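The encoder-based method can be sketched in software as a queue of parts in transit. This is a simplified illustration, not a production design: the class and method names are hypothetical, encoder rollover is ignored, and a part whose inspection has not finished is assumed to be rejected.

```python
from collections import deque

class EjectorSynchronizer:
    """Track parts between camera and ejector using conveyor encoder counts."""

    def __init__(self, counts_to_ejector):
        # Encoder counts that correspond to the known camera-to-ejector distance
        self.counts_to_ejector = counts_to_ejector
        self.pending = deque()  # parts in transit, oldest first

    def on_part_detected(self, encoder_count):
        """Proximity sensor fired: remember the encoder value at image capture."""
        part = {"trigger_count": encoder_count, "passed": None}
        self.pending.append(part)
        return part

    def on_inspection_result(self, part, passed):
        """Image analysis finished for this part."""
        part["passed"] = passed

    def on_encoder_update(self, encoder_count):
        """Return one action for every part that has reached the ejector."""
        actions = []
        while self.pending and (
            encoder_count - self.pending[0]["trigger_count"]
            >= self.counts_to_ejector
        ):
            part = self.pending.popleft()
            # No result yet counts as a failure (assumed safe default)
            actions.append("pass" if part["passed"] else "eject")
        return actions

sync = EjectorSynchronizer(counts_to_ejector=1000)
good = sync.on_part_detected(encoder_count=100)
bad = sync.on_part_detected(encoder_count=400)
sync.on_inspection_result(good, passed=True)
sync.on_inspection_result(bad, passed=False)
```

Calling `on_encoder_update` with each new encoder value then yields a pass or eject decision for a part exactly when it has traveled the known distance from the camera.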

The challenge with this technique is that the system processor must constantly track the encoder value and proximity sensors while simultaneously running image-processing algorithms, in order to classify the parts and communicate with the ejection system. This multifunction juggling can lead to a complex software architecture, add considerable amounts of latency and jitter, increase the risk of inaccuracy, and decrease throughput. High-performance processors, such as field programmable gate arrays, are now being used to solve this challenge by providing a hardware-timed method of tightly synchronizing inputs and outputs with vision inspection results.

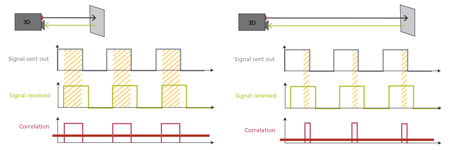

Varying sent-to-received delays correlate to varying distances between a time-of-flight sensor and portions of an object or scene.

Workplace safety

Humans are still a key aspect of the modern automated manufacturing environment, adding their flexibility to adjust processes “on the fly.” They need to cooperate with robots, which are no longer confined in cages but share the work space with their human coworkers. Industrial safety in this context is a big challenge, since increased flexibility and safety objectives can be contradictory. A system deployed in a shared work space needs to have a higher level of perception of surrounding objects, such as other robots, work pieces, and human beings.

Three-dimensional cameras help create a reliable map of the environment around the robot. This capability allows for robust detection of people in safety and warning zones, enabling adaptation of movement trajectories and speeds for cooperation purposes, as well as collision avoidance. Vision-based advanced driver assistance systems are already widely deployed in automobiles for safety purposes, and the first vision-based industrial automation safety products are now entering the market. They aim to offer a smart and flexible approach to machine safety, necessary for reshaping factory automation.
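The idea of safety and warning zones can be sketched as a mapping from the nearest detected person to a robot speed factor. The zone radii, the linear ramp, and the function names below are illustrative assumptions; they are not drawn from any safety standard or product.

```python
import math

def min_distance(robot_position, points):
    """Smallest Euclidean distance (meters) from the robot to any 3-D point.

    points would come from a depth camera after person detection;
    here they are plain (x, y, z) tuples. Returns infinity if empty.
    """
    return min((math.dist(robot_position, p) for p in points),
               default=math.inf)

def speed_scale(distance, stop_radius=0.5, warning_radius=2.0):
    """Map the nearest-person distance to a speed factor between 0 and 1."""
    if distance <= stop_radius:
        return 0.0   # safety zone: stop the robot
    if distance >= warning_radius:
        return 1.0   # nobody nearby: full speed
    # Warning zone: ramp speed linearly with distance
    return (distance - stop_radius) / (warning_radius - stop_radius)

# Hypothetical detections, in meters, relative to the robot base
person_points = [(3.0, 4.0, 0.0), (1.25, 0.0, 0.0)]
factor = speed_scale(min_distance((0.0, 0.0, 0.0), person_points))
```

A graded response of this kind lets the robot keep working at reduced speed when a person is nearby, rather than halting whenever anyone enters the shared work space.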

Depth sensing

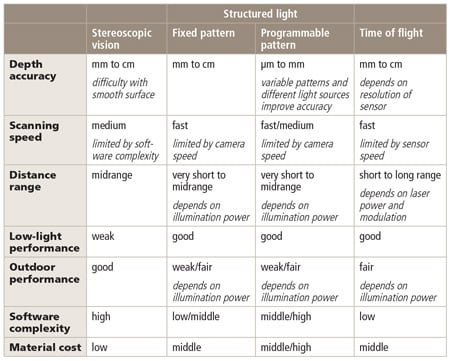

As already mentioned, 3-D cameras can deliver notable advantages over their 2-D precursors in manufacturing environments. Several depth sensor technology alternatives exist, each with strengths, shortcomings, and common use cases (table 1 and reference 1). Stereoscopic vision, combining two 2-D image sensors, is currently the most common 3-D sensor approach. Passive (i.e., relying solely on ambient light) range determination via stereoscopic vision uses the disparity in viewpoints between a pair of near-identical cameras to measure the distance to a subject of interest. In this approach, the centers of perspective of the two cameras are separated by a baseline or inter-pupillary distance to generate the parallax necessary for depth measurement.
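The relationship between disparity and distance can be shown with a short sketch. It assumes an idealized, rectified pair of pinhole cameras, for which depth equals focal length times baseline divided by disparity; the function name and example values are illustrative.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Distance (meters) to a point seen by a rectified stereo camera pair.

    disparity_px: horizontal shift of the point between the two images
    focal_length_px: focal length expressed in pixels
    baseline_m: separation of the two centers of perspective, in meters
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px

# Hypothetical camera: 800-pixel focal length, 10 cm baseline
near = depth_from_disparity(40.0, focal_length_px=800.0, baseline_m=0.1)
far = depth_from_disparity(20.0, focal_length_px=800.0, baseline_m=0.1)
```

Halving the disparity doubles the computed distance, so depth resolution degrades for faraway objects. A wider baseline produces more parallax and helps at longer ranges.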

Table 1. Three-dimensional vision sensor technology comparisons

Microsoft’s Kinect is today’s best-known structured light-based 3-D sensor. The structured light approach, like the time-of-flight technique to be discussed next, is an example of an active scanner, because it generates its own electromagnetic radiation and analyzes the reflection of this radiation from the object. Structured light projects a set of patterns onto an object, capturing the resulting image with an offset image sensor. Similar to stereoscopic vision techniques, this approach takes advantage of the known camera-to-projector separation to locate a specific point between them and compute the depth with triangulation algorithms. Thus, image processing and triangulation algorithms convert the distortion of the projected patterns, caused by surface roughness, into 3-D information.

An indirect time-of-flight (ToF) system obtains travel-time information by measuring the delay or phase-shift of a modulated optical signal for all pixels in the scene. Generally, this optical signal is situated in the near-infrared portion of the spectrum so as not to disturb human vision. The ToF sensor in the system consists of an array of pixels, where each pixel is capable of determining the distance to the scene. Each pixel measures the delay of the received optical signal with respect to the sent signal. A correlation function is performed in each pixel, followed by averaging or integration. The resulting correlation value then represents the travel time or delay. Since all pixels obtain this value simultaneously, “snap-shot” 3-D imaging is possible.
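The per-pixel conversion from phase shift to distance can be sketched as follows. This example assumes one common scheme in which four correlation samples are taken 90 degrees apart and sample k follows offset + amplitude * cos(phase + k * pi / 2). Other sign conventions exist, and the function names are illustrative.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # meters per second

def tof_distance(c0, c1, c2, c3, modulation_hz):
    """Estimate distance (meters) for one pixel from four correlation samples.

    The differences cancel the constant offset (ambient light), and
    atan2 recovers the phase delay of the received signal.
    """
    phase = math.atan2(c3 - c1, c0 - c2) % (2 * math.pi)
    # Light travels to the scene and back, hence 4*pi rather than 2*pi
    return SPEED_OF_LIGHT * phase / (4 * math.pi * modulation_hz)

def unambiguous_range(modulation_hz):
    """Largest distance (meters) measurable before the phase wraps around."""
    return SPEED_OF_LIGHT / (2 * modulation_hz)
```

The modulation frequency sets a trade-off: a higher frequency gives finer distance resolution per unit of phase, but shortens the range over which distances can be told apart.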

Vision processing

Vision algorithms typically require high computing performance. And unlike many other applications, where standards mean that there is strong commonality among algorithms used by different equipment designers, no such standards that constrain algorithm choice exist in vision applications. On the contrary, there are often many approaches to choose from to solve a particular vision problem. Therefore, vision algorithms are very diverse, and tend to change fairly rapidly over time. And, of course, industrial automation systems are usually required to fit into tight cost and power consumption envelopes.

Achieving the combination of high performance, low cost, low power, and programmability is challenging (reference 2). Special-purpose hardware typically achieves high performance at low cost, but with little programmability. General-purpose CPUs provide programmability, but with weak performance, poor cost effectiveness, or low energy efficiency. Demanding vision processing applications most often use a combination of processing elements, which might include, for example:

- a general-purpose CPU for heuristics, complex decision making, network access, user interface, storage management, and overall control

- a high-performance digital signal processor for real-time, moderate-rate processing with moderately complex algorithms

- one or more highly parallel engines for pixel-rate processing with simple algorithms

Although any processor can in theory be used for vision processing in industrial automation systems, the most promising types today are the:

- high-performance CPU

- graphics processing unit with a CPU

- digital signal processor with accelerator(s) and a CPU

- field-programmable gate array with a CPU

The Embedded Vision Alliance

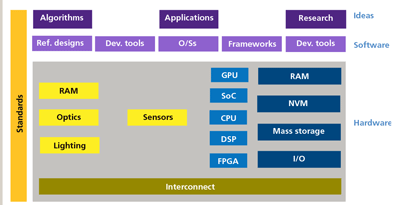

The rapidly expanding use of vision technology in industrial automation is part of a much larger trend. From consumer electronics to automotive safety systems, today we see vision technology enabling a wide range of products that are more intelligent and responsive than before, and thus more valuable to users. We use the term “embedded vision” to refer to this growing practical use of vision technology in embedded systems, mobile devices, special-purpose PCs, and the cloud, with industrial automation being one showcase application.

Embedded vision can add valuable capabilities to existing products, such as the vision-enhanced industrial automation systems discussed in this article. It can also provide significant new markets for hardware, software, and semiconductor manufacturers. The Embedded Vision Alliance, a worldwide organization of technology developers and providers, is working to empower engineers to transform this potential into reality. Aptina Imaging, Bluetechnix, National Instruments, SoftKinetic, Texas Instruments, and Xilinx, the co-authors of this article, are members of the Embedded Vision Alliance.

The Alliance’s mission is to provide engineers with practical education, information, and insights to help them incorporate embedded vision capabilities into new and existing products. To execute this mission, the Alliance maintains a website (www.embedded-vision.com) with tutorial articles, videos, code downloads, and a discussion forum staffed by technology experts. Registered website users can also receive the Alliance’s twice-monthly email newsletter (www.embeddedvisioninsights.com), among other benefits.

In addition, the Embedded Vision Alliance offers a free online training facility for embedded vision product developers: the Embedded Vision Academy (www.embeddedvisionacademy.com). This area of the Alliance website provides in-depth technical training and other resources to help engineers integrate visual intelligence into next-generation embedded and consumer devices. Course material in the Embedded Vision Academy spans a wide range of vision-related subjects, from basic vision algorithms to image preprocessing, image sensor interfaces, and software development techniques and tools, such as OpenCV. Access is free to all through a simple registration process (www.embedded-vision.com/user/register).

The embedded vision ecosystem spans hardware, semiconductor, and software component suppliers, subsystem developers, systems integrators, and end users, along with the fundamental research that makes ongoing breakthroughs possible.

The organization has an annual Embedded Vision Summit (www.embeddedvisionsummit.com) with technical educational forums for engineers interested in incorporating visual intelligence into electronic systems and software. The summit has how-to presentations, keynote talks, demonstrations, and opportunities to interact with technical experts from Alliance member companies. The goal is to inspire engineers’ imaginations about the potential applications for embedded vision technology, offer practical know-how, and provide opportunities for engineers to meet and talk with leading embedded vision technology companies.

Contributors (members of Embedded Vision Alliance)

Michael Brading is chief technical officer of the Automotive Industrial and Medical business unit at Aptina Imaging. He has a B.S. in communication engineering from the University of Plymouth.

Tim Droz heads the SoftKinetic U.S. organization, delivering 3-D ToF and gesture solutions to international customers, such as Intel and Texas Instruments. Droz earned a BSEE from the University of Virginia, and an M.S. in electrical and computer engineering from North Carolina State University.

Pedro Gelabert is a senior member of the technical staff and a systems engineer at Texas Instruments. He received his B.S. and Ph.D. in electrical engineering from the Georgia Institute of Technology. He is a member of the Institute of Electrical and Electronics Engineers, holds four patents, and has published more than 40 papers, articles, user guides, and application notes.

Carlton Heard is a product manager at National Instruments, responsible for vision hardware and software. Heard has a bachelor’s degree in aerospace and mechanical engineering from Oklahoma State University.

Yvonne Lin is the marketing manager for medical and industrial imaging at Xilinx. Lin holds a bachelor’s degree in electrical engineering from the University of Toronto.

Thomas Maier is a sales and field application engineer at Bluetechnix and has been working on embedded systems for more than 10 years, particularly on various embedded image processing applications on digital signal processor architectures. After completing the Institution of Higher Education at Klagenfurt, Austria, in the area of telecommunications and electronics, he studied at the Vienna University of Technology. Maier has been at Bluetechnix since 2008.

Manjunath Somayaji is the Imaging Systems Group manager at Aptina Imaging, where he leads algorithm development efforts on novel multi-aperture/array-camera platforms. He received his M.S. and Ph.D. from Southern Methodist University (SMU) and his B.E. from the University of Mysore, all in electrical engineering. He was formerly a research assistant professor in SMU’s electrical engineering department. Prior to SMU, he worked at OmniVision-CDM Optics as a senior systems engineer.

Daniël Van Nieuwenhove is the chief technical officer at SoftKinetic. He received an engineering degree in electronics with great distinction at the VUB (Free University of Brussels) in 2002. Van Nieuwenhove holds multiple patents and is the author of several scientific papers. In 2009, he obtained a Ph.D. on CMOS circuits and devices for 3-D time-of-flight imagers. As co-founder of Optrima, he brought its proprietary 3-D CMOS time-of-flight sensors and imagers to market.

About the Author

Brian Dipert is editor-in-chief at the Embedded Vision Alliance. He is also a senior analyst at Berkeley Design Technology, Inc., and editor-in-chief of InsideDSP, the company’s online newsletter dedicated to digital signal processing technology. Dipert has a B.S. in electrical engineering from Purdue University. His professional career began at Magnavox Electronics Systems in Fort Wayne, Ind. Dipert subsequently spent eight years at Intel Corporation in Folsom, Calif. He then spent 14 years at EDN Magazine.

A version of this article also was published at InTech magazine.