This guest blog post is part of a series written by Edward J. Farmer, PE, ISA Fellow and author of the new ISA book Detecting Leaks in Pipelines.

Sometimes when I get wrapped up in automation issues, hordes of observation and analysis equipment flood my mind. New visions promote change, which motivates new ways of seeing and doing things.

In Europe, World War II military operations shifted from the mostly static trench warfare of World War I to high mobility. In World War I, the “big thing” was impenetrable static defense, but the “next big thing” was the fully mechanized division that could reach a critical point and prevail at the critical moment.

Communications became dependent on go-anywhere mobile technology, mostly radio operators using Morse code, which relied on encryption for security. That pushed cryptography to the forefront, a field which, in retrospect, was shockingly similar in mission and prosecution to concepts we now associate with automation science, with both stemming from automation research concepts.

The theory was that even when encrypted, “it” is in there and, just like the secrets of nature, can be found and unraveled with the right methodology. I’ve always loved that quest: the Army taught me cryptography and college taught me process control. Eventually, I became fascinated with how we could learn those things hiding just beyond our ability to perceive them. Keep in mind, the answers to the secrets we hope to unravel are “in there,” installed by humans in one case and by nature in the other.

Cryptography is a good way to enhance understanding of the more esoteric portions of this journey: it is clever, and it invites philosophical discussion of the difference between what is stochastic and what is deterministic. When we see evidence of the mind and fingerprints of mankind, we are on the road to some deterministic outcome, while “randomicity,” or some impenetrable illusion of it, suggests mystery still beyond our capability.

The Axis powers used an encryption machine called the Enigma machine. It was a letter-substitution approach, but the connection between the clear-text letter and the encrypted one was not as simple as in the ancient codes, which used some hopefully secret algorithm to accomplish the same sort of thing.

The Enigma machine changed the linkage between the input and output letter each time a letter was encrypted. A string of “Zs,” for example, would not produce the same coded letter each time. In fact (and depending on the design of a specific machine), it could take half a million letters before a pattern might emerge.
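To make the idea of a changing linkage concrete, here is a minimal Python sketch of a single stepping rotor. It is a toy, not the Enigma: the wiring is a made-up seeded shuffle, the function names `make_rotor` and `encrypt_stepping` are hypothetical, and with only one rotor the pattern repeats after 26 letters rather than the much longer interval described above.

```python
import random
import string

ALPHABET = string.ascii_uppercase

def make_rotor(seed: int) -> str:
    """Return a hypothetical rotor wiring: a seeded shuffle of the alphabet."""
    letters = list(ALPHABET)
    random.Random(seed).shuffle(letters)
    return "".join(letters)

def encrypt_stepping(text: str, wiring: str, start: int = 0) -> str:
    """Substitute each letter (A-Z only), advancing the rotor one step per keypress."""
    out = []
    position = start
    for ch in text:
        position = (position + 1) % 26  # the rotor turns before the letter is encoded
        entering = (ALPHABET.index(ch) + position) % 26
        leaving = (ALPHABET.index(wiring[entering]) - position) % 26
        out.append(ALPHABET[leaving])
    return "".join(out)

wiring = make_rotor(seed=1)
ciphertext = encrypt_stepping("Z" * 26, wiring)
print(ciphertext)
print(len(set(ciphertext)), "distinct letters came from one repeated input letter")
```

Because the rotor position is part of every substitution, the same input letter lands on a different wire each time, which is the property the string of Zs illustrates.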

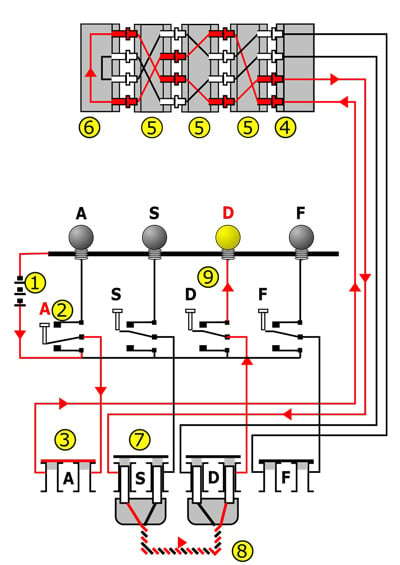

Enigma wiring diagram with arrows and the numbers 1 to 9 showing how current flows from key depression to a lamp being lit. The A key is encoded to the D lamp. D yields A, but A never yields A; this property was due to a patented feature unique to the Enigmas, and could be exploited by cryptanalysts in some situations.

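The caption's two properties (D yields A whenever A yields D, and no letter ever yields itself) follow from sending the current through the rotors, bouncing it off a reflector that swaps letters in pairs, and returning it through the same rotors. The sketch below checks this on a one-rotor toy; the wirings are invented seeded shuffles and the helper names are hypothetical.

```python
import random
import string

ALPHABET = string.ascii_uppercase

def make_rotor(seed: int) -> dict:
    """Hypothetical rotor: a seeded one-to-one scramble of the alphabet."""
    letters = list(ALPHABET)
    random.Random(seed).shuffle(letters)
    return dict(zip(ALPHABET, letters))

def make_reflector(seed: int) -> dict:
    """Hypothetical reflector: the 26 letters wired together as 13 swapped pairs."""
    letters = list(ALPHABET)
    random.Random(seed).shuffle(letters)
    pairs = {}
    for a, b in zip(letters[0::2], letters[1::2]):
        pairs[a], pairs[b] = b, a
    return pairs

def press_key(ch: str, rotor: dict, reflector: dict) -> str:
    """Forward through the rotor, off the reflector, back through the rotor."""
    inverse = {out: inp for inp, out in rotor.items()}
    return inverse[reflector[rotor[ch]]]

rotor = make_rotor(seed=2)
reflector = make_reflector(seed=3)
table = {ch: press_key(ch, rotor, reflector) for ch in ALPHABET}

print(all(table[ch] != ch for ch in ALPHABET))         # no letter encodes to itself
print(all(table[table[ch]] == ch for ch in ALPHABET))  # the mapping is its own inverse
```

Turning the rotor only changes which scramble sits in front of the reflector, so both properties hold at every position. That is why the same machine setting could both encrypt and decrypt, and why "A never yields A" gave analysts something to work with.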

What’s more, this sequence of repeated encryptions of “Z” would not always begin with the same encrypted letter. Where the sequence began depended on the settings of a three-letter starting code, and it would proceed for perhaps a half-million letter encryptions before any hint of a pattern might become evident. Seemingly, if the message length did not exceed a half-million letters, it would appear random and hence undecipherable. If this were one of nature’s secrets it would appear stochastic: hopelessly indecipherable.

Somehow, early in the war, the mystery lifted when a brilliant British fellow named Alan Turing, working with a team of outstanding peers, unraveled the Enigma code to the extent that Allied commanders were able to read Enigma messages more quickly than the intended Axis recipient. The early visions of modern computer theory were developed and manifested by Colossus, the most powerful and versatile programmable computer of its day.

All that, though, stemmed from the realization that portions of a project may be stochastic and other portions deterministic. There is always a way, with enough time and effort, to unravel the deterministic parts, and once you do, the only obfuscation that remains is in the seemingly stochastic portions. Finding a way through some modest level of pseudo-randomness is a lot easier than confronting the situation as though it were entirely stochastic. Turing’s efforts with Enigma illustrate the analytical power of insightfully dividing the problem and conquering it piece by piece.

One can observe, and many have, that a man-made process intended to appear random was created by the mind of a man according to some set of rules: an algorithm or “recipe.” The product is, in effect, a pseudorandom process and can be unraveled by investigating the “pseudo” part of the “random.”
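A modern software analogy, offered only as an illustration, is a seeded pseudorandom generator. The sketch below uses Python's standard `random.Random`; the helper name and the seed values are arbitrary choices of mine. The output looks random, yet anyone who knows the recipe and the seed can regenerate it exactly.

```python
import random
import string

def pseudo_random_letters(seed: int, count: int) -> str:
    """Produce letters that look random but are fully determined by the seed."""
    rng = random.Random(seed)
    return "".join(rng.choice(string.ascii_uppercase) for _ in range(count))

first = pseudo_random_letters(seed=1939, count=40)
second = pseudo_random_letters(seed=1939, count=40)
other = pseudo_random_letters(seed=1940, count=40)

print(first)
print(first == second)  # same recipe and same seed give the same letters
print(first == other)   # a different seed gives a different-looking stream
```

The "pseudo" part is the seed plus the algorithm. Recover those and nothing stochastic is left.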

In this case, near-complete determinism emerged when some Polish mathematicians set out to unravel the Enigma machine during their early encounters before World War II. Their work made it to England, and hence to Bletchley Park, before Britain entered the war. Essentially, most of the encryption was done by three (sometimes four) mechanical rings, or rotors, selected from a small set of choices. They interchanged an “input” letter for a different “output” letter by means of physical wiring within the rings. Each of the rings, and the path between them, was deterministic; only the starting position was chosen, presumably randomly, by the user.

There are some other interesting features, but for the present the rotors illustrate the core issue. Essentially, once the Poles had the wiring of the rotors, all they needed to know was which rotors were installed, in what order, and what the starting position of each was. That starting position was significant. On a three-rotor Enigma with 26 letters there were nearly 18,000 possibilities. While trying each of them might trigger the production of something that looked like clear-text, that was a lot of button-pushing in those days.
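The "nearly 18,000" figure is 26 × 26 × 26 = 17,576 starting positions. The Python sketch below counts them and then does the button-pushing by machine on a toy three-rotor model. Everything about the model is an assumption for illustration: the wirings are seeded shuffles rather than the historical rotors, the stepping is a plain odometer, there is no plugboard, and "looks like clear-text" is reduced to matching a known opening word.

```python
import itertools
import random
import string

ALPHABET = string.ascii_uppercase
N = len(ALPHABET)

def shuffled(seed):
    """Hypothetical wiring: a seeded shuffle of the numbers 0..25."""
    values = list(range(N))
    random.Random(seed).shuffle(values)
    return values

# Made-up wirings, NOT the historical Enigma rotors.
ROTORS = [shuffled(s) for s in (11, 22, 33)]  # left, middle, right
INVERSES = [[r.index(i) for i in range(N)] for r in ROTORS]
REFLECTOR = [0] * N
_order = shuffled(44)
for a, b in zip(_order[0::2], _order[1::2]):
    REFLECTOR[a], REFLECTOR[b] = b, a  # 13 swapped pairs

def through(table, offset, signal):
    """Pass a signal through one rotor that has been turned by `offset` steps."""
    return (table[(signal + offset) % N] - offset) % N

def crypt(text, start):
    """Encrypt or decrypt A-Z text from a three-letter start such as 'AGB'."""
    pos = [ALPHABET.index(c) for c in start]
    out = []
    for ch in text:
        # Simplified odometer stepping: right rotor every key, carry on wrap.
        pos[2] = (pos[2] + 1) % N
        if pos[2] == 0:
            pos[1] = (pos[1] + 1) % N
            if pos[1] == 0:
                pos[0] = (pos[0] + 1) % N
        signal = ALPHABET.index(ch)
        for i in (2, 1, 0):  # right to left toward the reflector
            signal = through(ROTORS[i], pos[i], signal)
        signal = REFLECTOR[signal]
        for i in (0, 1, 2):  # back out, left to right
            signal = through(INVERSES[i], pos[i], signal)
        out.append(ALPHABET[signal])
    return "".join(out)

def find_start(ciphertext, known_opening):
    """Try every start position; keep those that decrypt to the known opening."""
    hits = []
    for combo in itertools.product(ALPHABET, repeat=3):
        start = "".join(combo)
        if crypt(ciphertext[:len(known_opening)], start) == known_opening:
            hits.append(start)
    return hits

print(N ** 3)  # 17576 starting positions
secret = crypt("WEATHERREPORTFORTODAY", "AGB")
candidates = find_start(secret, "WEATHERREPORT")
print(candidates)
```

The search is exhaustive and takes a moment on a modern computer. Once the wiring is known, the three-letter start is the only thing left to find.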


This was a motivation for the creation of Colossus. Keeping in mind there is a longer story (see Gordon Welchman’s book, The Hut Six Story), notice that a series of a half-million pseudo-random encryptions could now be converted to cleartext by knowing three letters in proper sequence. With the help of some Colossus derivative work, Enigma messages could be converted to cleartext as they were fed to the computer, printed on paper, and hand-carried to the interested parties, all in less time than it took an Axis commander to decrypt the received message with his Enigma machine. In this case, the mysterious and complex secret devolved into three letters in a specific order. Of course, there was a lot of effort, ingenuity, and dedication involved. Think for a moment: a machine with a randomization interval of a half-million operations could be defeated by simply knowing three letters. Automation (operations) research provided an enormous advantage for the Allies.

Even with understanding, the Enigma process had the potential of being somewhat secure: there were still those three letters in sequence. Somehow, regardless of the emphasis, it is hard for many people to perceive the importance of seemingly simple things.

It was common for some operators to use their initials, something like AGB, on all the messages they transmitted. Long before World War II, it was common for radio operators to discern who was sending Morse code by subtleties in the way the letters were formed, the pacing and spacing between letters, where delays occurred, and procedural characteristics.

Colloquially, this was referred to as the sender’s “fist,” envisioning a hand huddled over a telegraph key. From the fist, an analyst could know which of the active operators was sending it, and by records augmented with some detective work, what his initials were. This eliminated the need to use repetitive analysis to unlock the three-letter code and the message could be directly handled by Colossus or a Colossus clone.

Think about this in terms of the passwords you use to restrict access to everything from your health records to an Amazon account. There is no perfect security without adequate randomization, and no convenience in a completely random world. This is one of those areas of practice in which there is room and motivation for fresh thinking, and the need for it has been established by the lessons of history. We have come a long way from Hut 6 in Bletchley Park but remain far short of where we need to go.

Think about this in terms of discovery. Can you find the linkage between what the process does and the parameters about it that you can observe? There must be logic and order (determinism) hiding in there!

Years ago, people would ask me whether cryptography or leak detection (finding a somewhat deterministic event obscured by stochastic disturbances in a nominally deterministic system) was the harder undertaking. Without a doubt, and buoyed by the work, thinking, and inspiration that Alan Turing and his colleagues brought to this kind of journey, leak detection was a lot easier. That is partly because of the science developed in, and since, their work, and partly because of their inspiration.

How to Optimize Pipeline Leak Detection: Focus on Design, Equipment and Insightful Operating Practices

What You Can Learn About Pipeline Leaks From Government Statistics

Is Theft the New Frontier for Process Control Equipment?

What Is the Impact of Theft, Accidents, and Natural Losses From Pipelines?

Can Risk Analysis Really Be Reduced to a Simple Procedure?

Do Government Pipeline Regulations Improve Safety?

What Are the Performance Measures for Pipeline Leak Detection?

What Observations Improve Specificity in Pipeline Leak Detection?

Three Decades of Life with Pipeline Leak Detection

How to Test and Validate a Pipeline Leak Detection System

Does Instrument Placement Matter in Dynamic Process Control?

Condition-Dependent Conundrum: How to Obtain Accurate Measurement in the Process Industries

Are Pipeline Leaks Deterministic or Stochastic?

How Differing Conditions Impact the Validity of Industrial Pipeline Monitoring and Leak Detection Assumptions

How Does Heat Transfer Affect Operation of Your Natural Gas or Crude Oil Pipeline?

Why You Must Factor Maintenance Into the Cost of Any Industrial System

Raw Beginnings: The Evolution of Offshore Oil Industry Pipeline Safety

How Long Does It Take to Detect a Leak on an Oil or Gas Pipeline?

About the Author

Edward Farmer, author and ISA Fellow, has more than 40 years of experience in the “high tech” part of the oil industry. He originally graduated with a bachelor of science degree in electrical engineering from California State University, Chico, where he also completed the master’s program in physical science. Over the years, Edward has designed SCADA hardware and software, practiced and written extensively about process control technology, and has worked extensively in pipeline leak detection. He is the inventor of the Pressure Point Analysis® leak detection system as well as the Locator® high-accuracy, low-bandwidth leak location system. He is a Registered Professional Engineer in five states and has worked on a broad scope of projects worldwide. He has authored three books, including the ISA book Detecting Leaks in Pipelines, plus numerous articles, and has developed four patents. Edward has also worked extensively in military communications where he has authored many papers for military publications and participated in the development and evaluation of two radio antennas currently in U.S. inventory. He is a graduate of the U.S. Marine Corps Command and Staff College. During his long industry career, he established EFA Technologies, Inc., a manufacturer of pipeline leak detection technology.

Image Sources: 1-Wikimedia, 2-Wikimedia, 3-Wikimedia, 4-Wikimedia, 5-Wikimedia, 6-Wikimedia